PROMPT2PRINT

End-to-end pipeline converting text prompts into 3D-printed polymer panels through AI and robotic fabrication

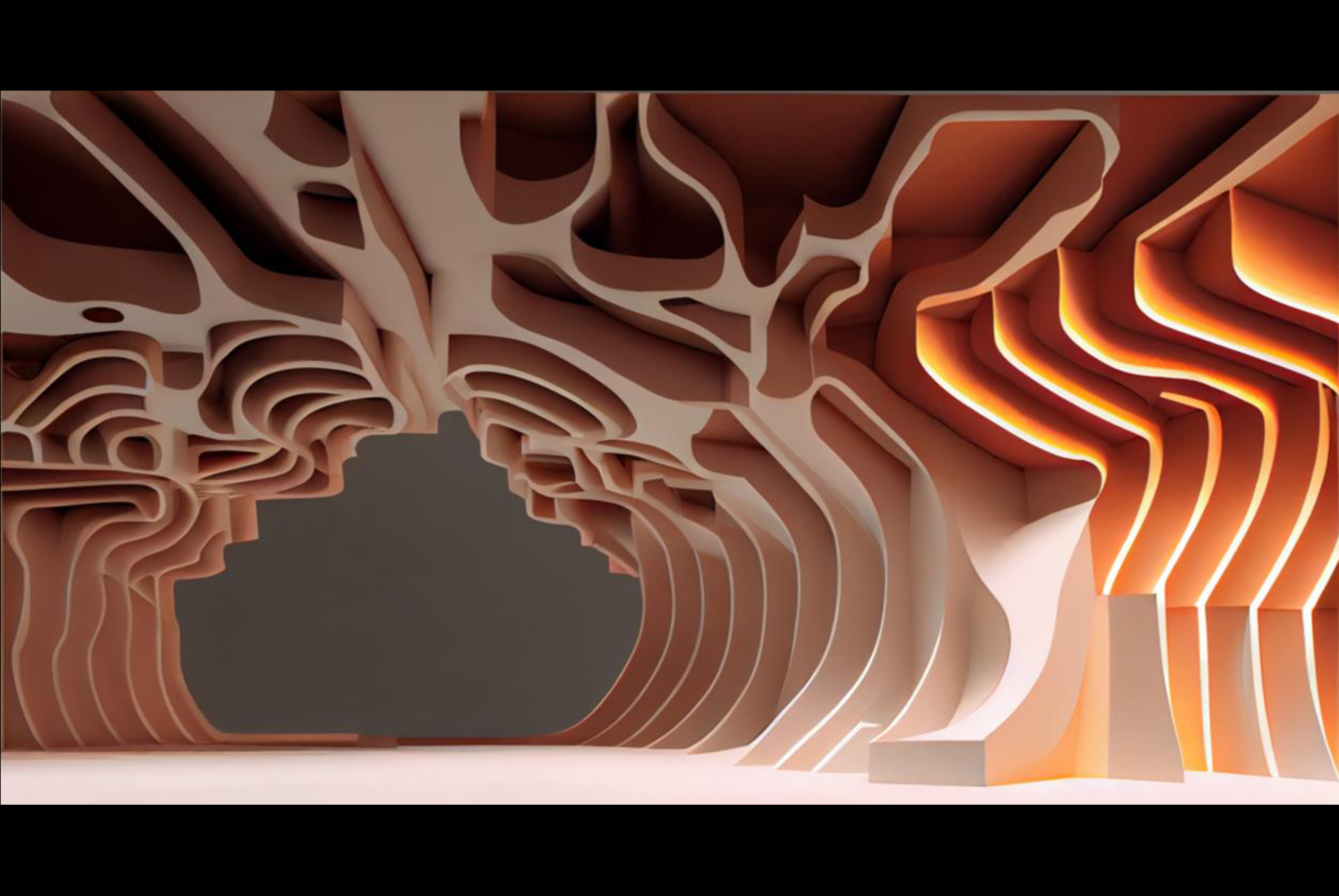

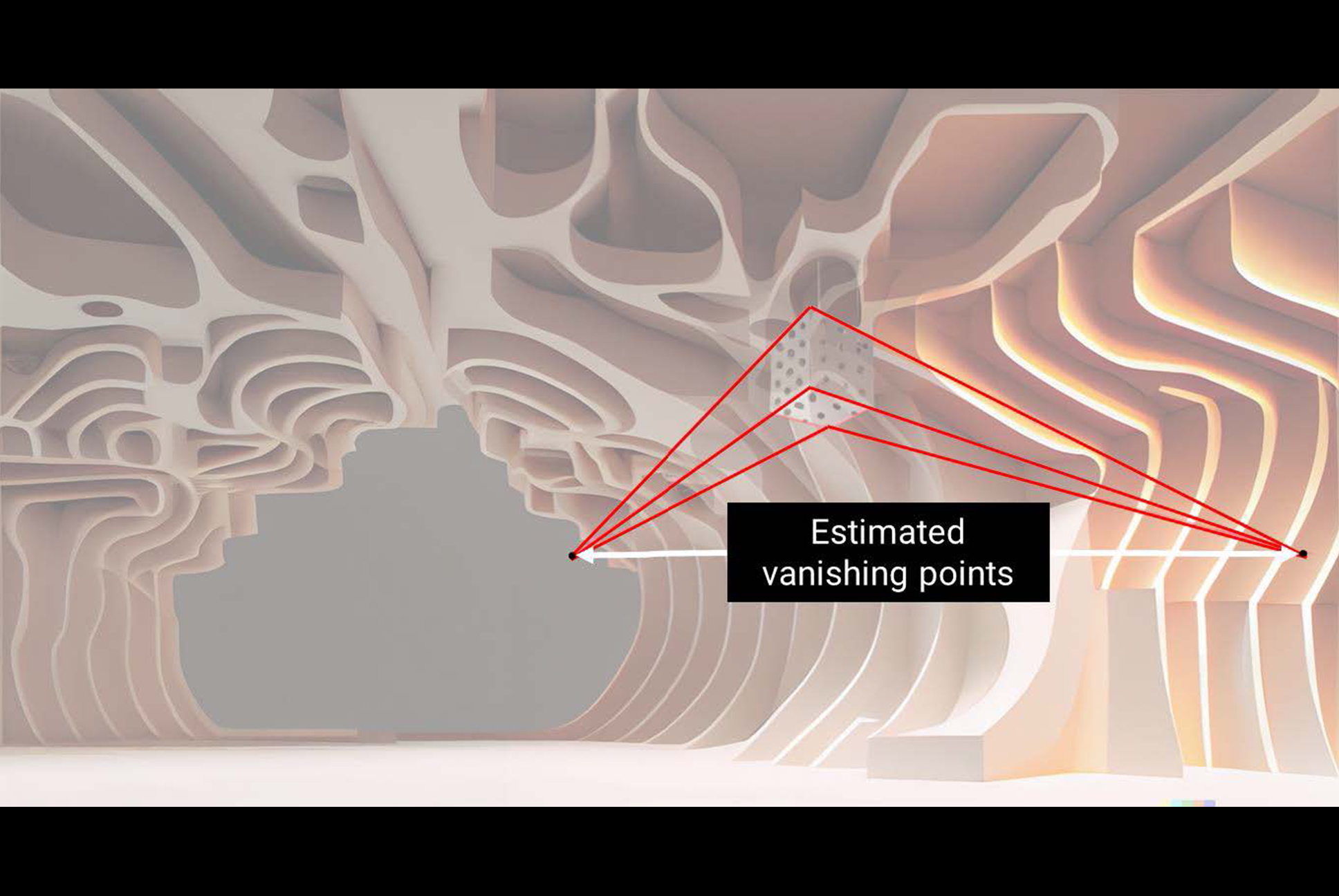

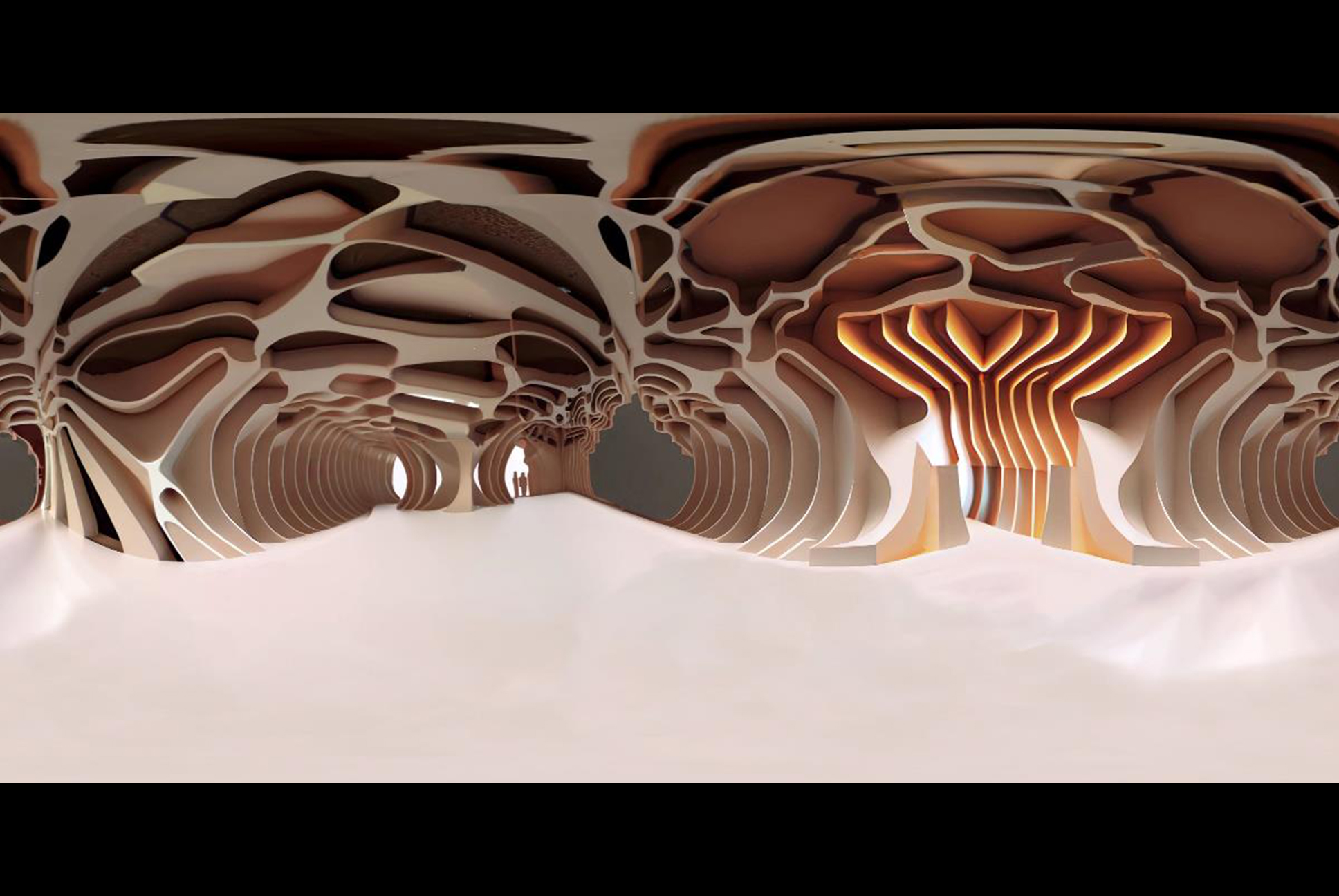

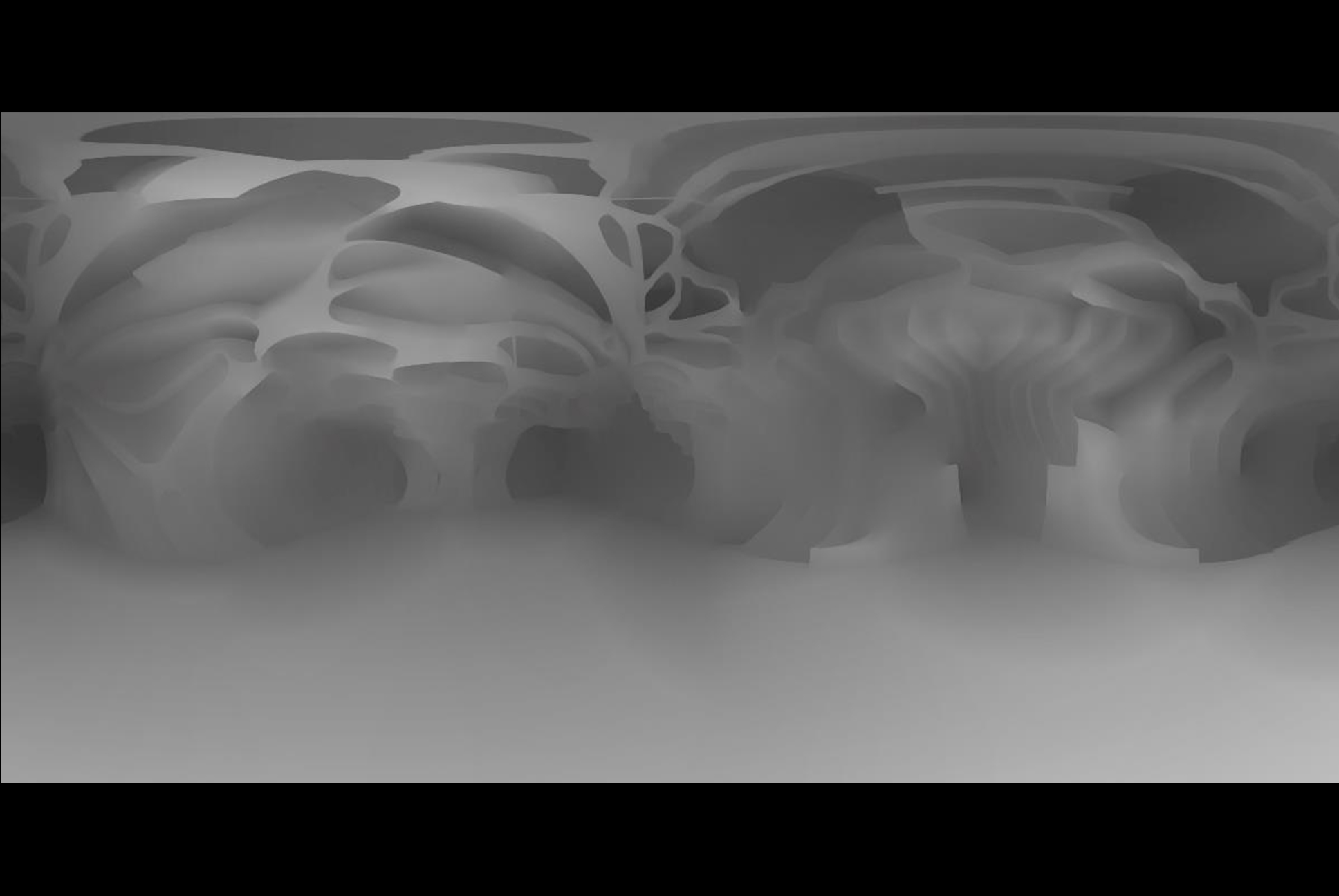

The project shows that a single workflow can turn a text prompt into a finished polymer panel. A diffusion model generates a panorama, depth inference builds a 3D mesh, parametric scripts split the mesh into printable tiles, and two robots reposition the pins of an adaptive bed and extrude polycarbonate. The test pavilion ceiling demonstrates that language alone can initiate and steer digital fabrication.

Designers seek faster ways to move from concept to built form.

The goal here is to prove that language-based image synthesis, depth recovery, and robotic making can operate as one chain.

The challenge lies in keeping data consistent while shifting across media: text, 2D image, 3D mesh, toolpaths, and finally, material deposition.

A diffusion model creates a 360° image from a verbal brief.

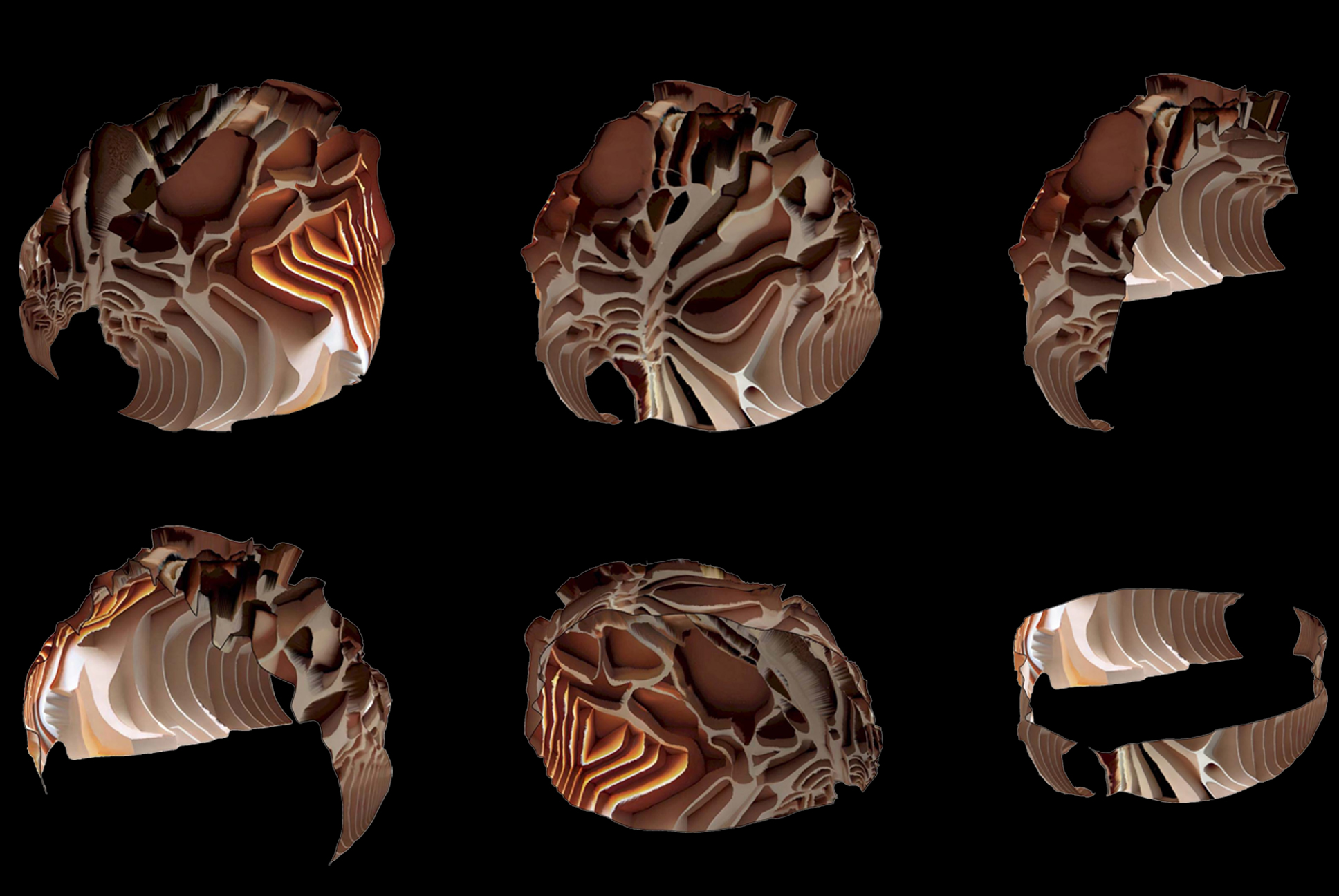

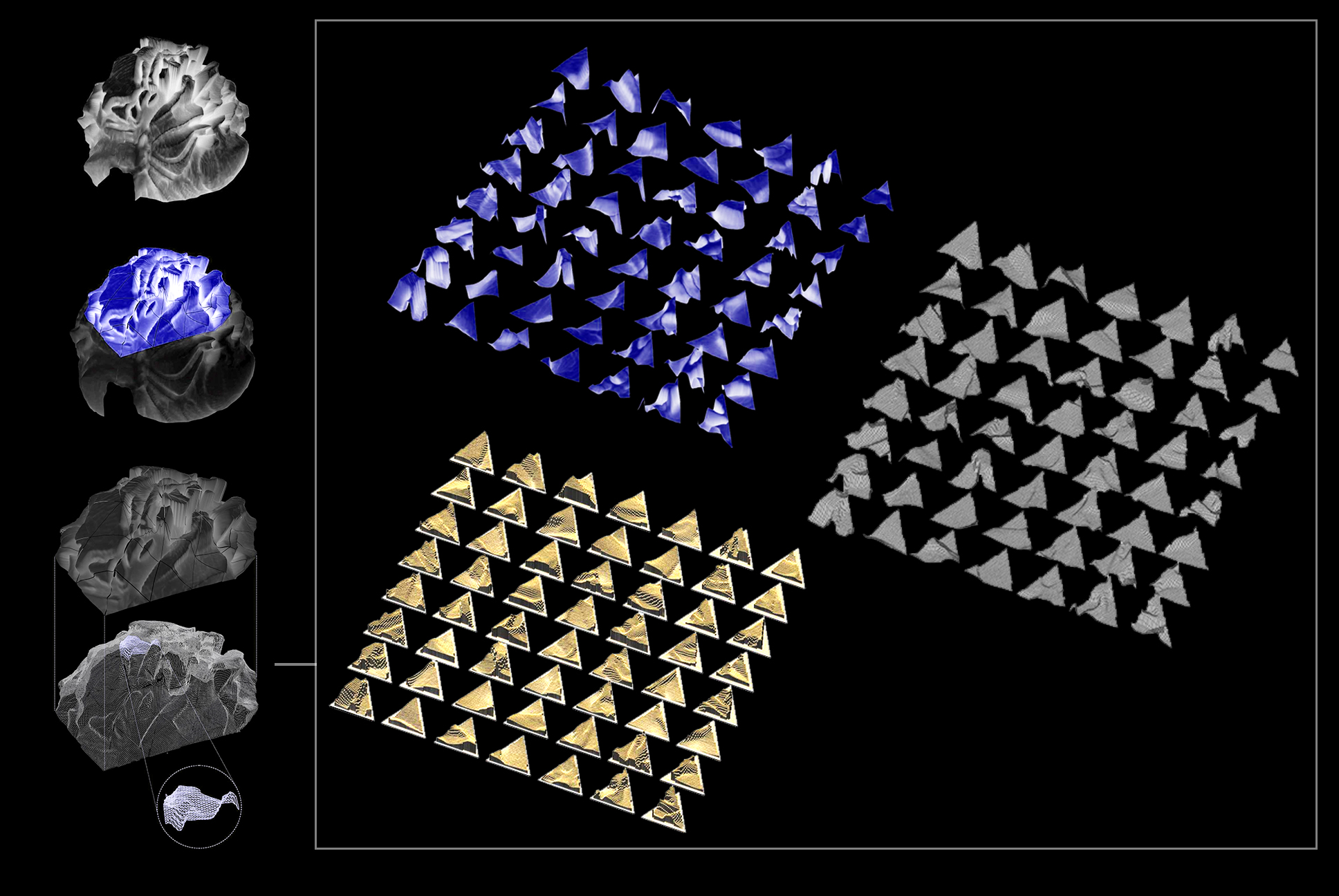

A monocular depth-estimation network produces a spherical depth map, which is converted to a mesh. The resulting surface is divided into fifty-six triangular panels.
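The depth-to-mesh step can be illustrated with a minimal sketch. It assumes the spherical depth map is stored as an equirectangular grid of radial distances (rows as latitude, columns as longitude); the project's actual projection, axis convention, and meshing tool are not stated, so the function names `depth_to_vertices` and `grid_faces` are hypothetical.

```python
import math

def depth_to_vertices(depth):
    """Convert an equirectangular depth grid (rows x cols of radial
    distances) into 3D points. Assumed convention: rows run from
    latitude +pi/2 (top) to -pi/2 (bottom), columns from longitude
    -pi to +pi, sampled at pixel centres, with y pointing up."""
    rows, cols = len(depth), len(depth[0])
    verts = []
    for i in range(rows):
        lat = math.pi / 2 - (i + 0.5) / rows * math.pi
        for j in range(cols):
            lon = (j + 0.5) / cols * 2 * math.pi - math.pi
            r = depth[i][j]
            verts.append((
                r * math.cos(lat) * math.sin(lon),
                r * math.sin(lat),
                r * math.cos(lat) * math.cos(lon),
            ))
    return verts

def grid_faces(rows, cols):
    """Build two triangles per pixel quad. Columns wrap around so the
    left and right edges of the panorama are stitched together."""
    faces = []
    for i in range(rows - 1):
        for j in range(cols):
            a = i * cols + j
            b = i * cols + (j + 1) % cols
            c = (i + 1) * cols + j
            d = (i + 1) * cols + (j + 1) % cols
            faces.append((a, b, c))
            faces.append((b, d, c))
    return faces
```

A constant depth grid yields points on a sphere, which is a quick sanity check before feeding in an inferred depth map. Splitting the surface into the fifty-six triangular panels is a separate parametric step that this sketch does not attempt.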

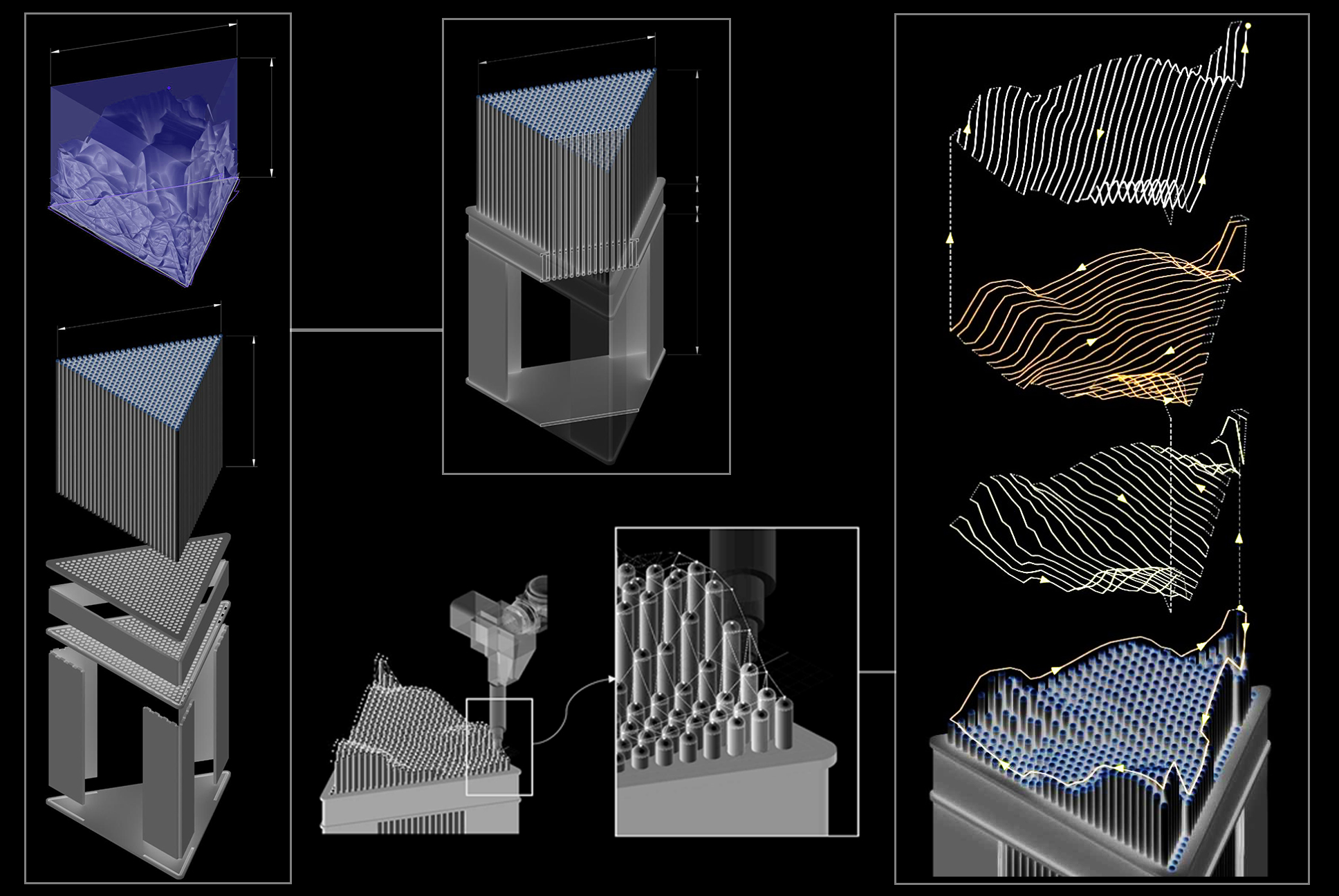

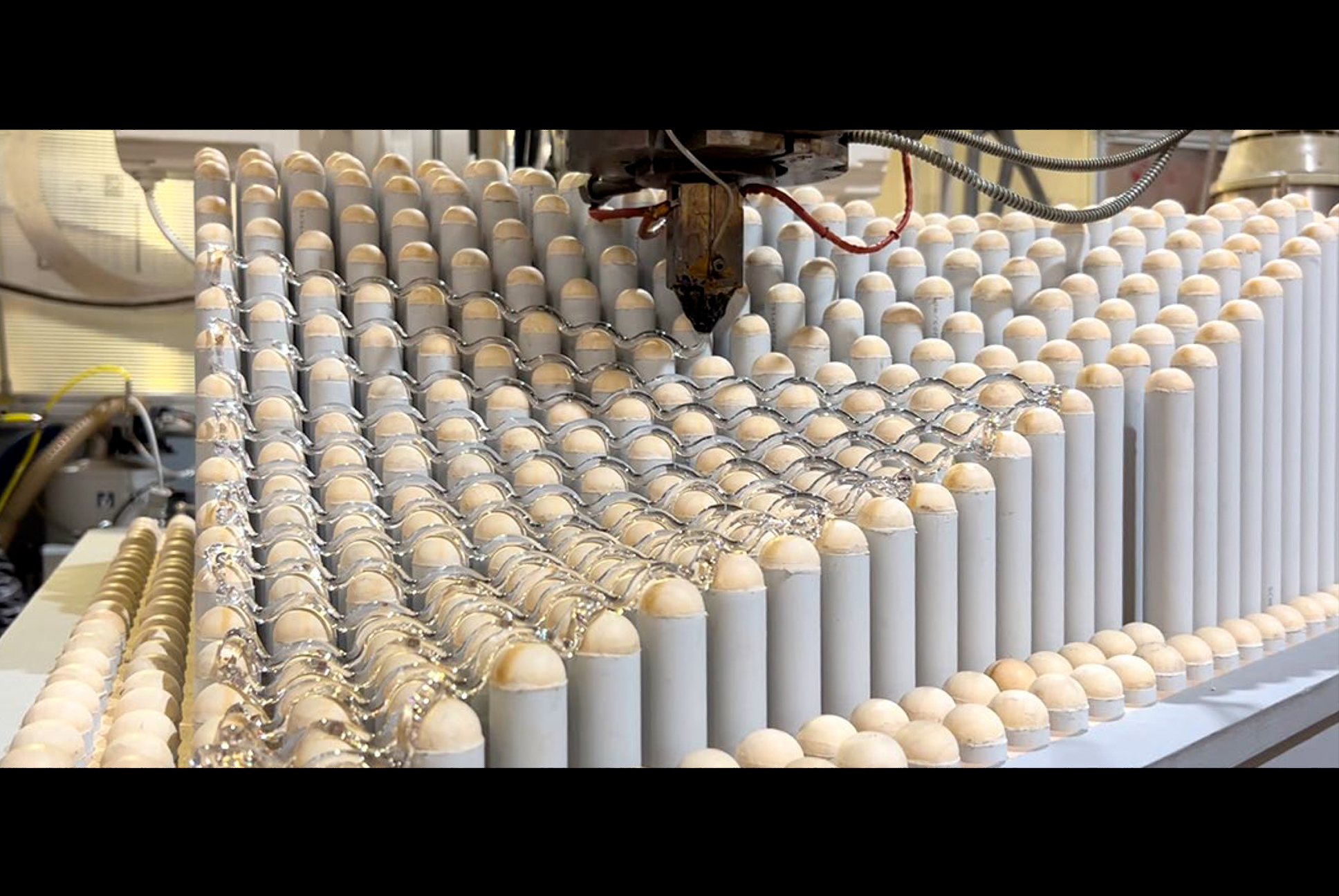

An adaptive bed of 496 sliding pins is programmed to match each panel’s profile; a second robot extrudes polycarbonate along offset paths, adjusting speed to height changes.
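A rough sketch of how pin heights and speed adjustment could be computed is given here. Several points are assumptions, not details from the project: the pin layout is not described, and because 496 equals 31·32/2 the sketch uses a triangular arrangement of 31 rows purely for illustration; `pitch`, `max_travel`, the `surface` callback, and the slope-based speed rule are all hypothetical.

```python
import math

def triangular_pin_grid(rows=31, pitch=1.0):
    """Pin centres in a triangular arrangement (assumed layout):
    row k holds k + 1 pins, so 31 rows give 496 pins."""
    pins = []
    for k in range(rows):
        y = k * pitch * math.sqrt(3) / 2
        x0 = -k * pitch / 2
        for m in range(k + 1):
            pins.append((x0 + m * pitch, y))
    return pins

def pin_heights(pins, surface, max_travel):
    """Sample the target surface at each pin and clamp the result
    to the pin's travel range [0, max_travel]."""
    return [min(max(surface(x, y), 0.0), max_travel) for x, y in pins]

def segment_speeds(path, base_speed):
    """Assumed rule: scale the feed by the ratio of horizontal length
    to 3D length, so steeper segments are traversed more slowly."""
    speeds = []
    for (x0, y0, z0), (x1, y1, z1) in zip(path, path[1:]):
        horiz = math.hypot(x1 - x0, y1 - y0)
        full = math.sqrt(horiz ** 2 + (z1 - z0) ** 2)
        speeds.append(base_speed if full == 0 else base_speed * horiz / full)
    return speeds
```

For example, `pin_heights(triangular_pin_grid(), lambda x, y: 0.1 * y, max_travel=2.0)` returns one clamped height per pin for a simple sloped surface. A real setup would replace the lambda with a lookup into the panel mesh and would tune the speed rule to the extruder's behaviour.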

A full-scale polycarbonate panel is produced directly from the computed toolpaths.

The robots reshape the pin bed, follow the prescribed trajectories, and deposit the material without collisions, confirming the pipeline’s capacity to translate a text prompt into a tangible, complex surface.

MATERIALS

Polycarbonate | Polychloride (PC) | Digital Materials

PROCESSES

Screw Extrusion | Reconfigurable Pin Tooling | Robotic 3D Printing | Robotic-Assisted Pin Arrangement | Artificial Intelligence & Machine Learning

DESIGNS

Aligned Sectioning | AI Image Generation

ROLES

STAKEHOLDERS

LOCATION

YEAR

2023
