HOLOGRIND

AR-guided robotic grinding system for customizable surface patterns on metal sheets

This project develops an interactive robotic grinding system for custom surface treatment in architecture and construction. The approach combines human gesture input, augmented reality, and robotic automation to generate grinding patterns on metal sheets. By using real-time spatial interaction and programmable filters, the system makes grinding outcomes more predictable while leaving room for creative variation. A 1×3 meter surface composed of six panels demonstrates the method. The project suggests new directions for human-machine collaboration in design-to-fabrication workflows, with possible extensions to other materials and contexts.

While robotics has improved the speed and consistency of many fabrication processes, subtractive tasks such as surface grinding remain less developed because their outcomes vary and they still depend on manual labor. This project addresses this gap with an interactive system that lets users design and control robotic grinding paths in real time through gesture recognition and augmented reality. The aim is to combine the flexibility of manual intervention with the repeatability of robotic motion to produce patterned surfaces on metal sheets. Previous work in human-machine collaboration has emphasized additive fabrication; this research focuses instead on subtractive, texture-generating processes.

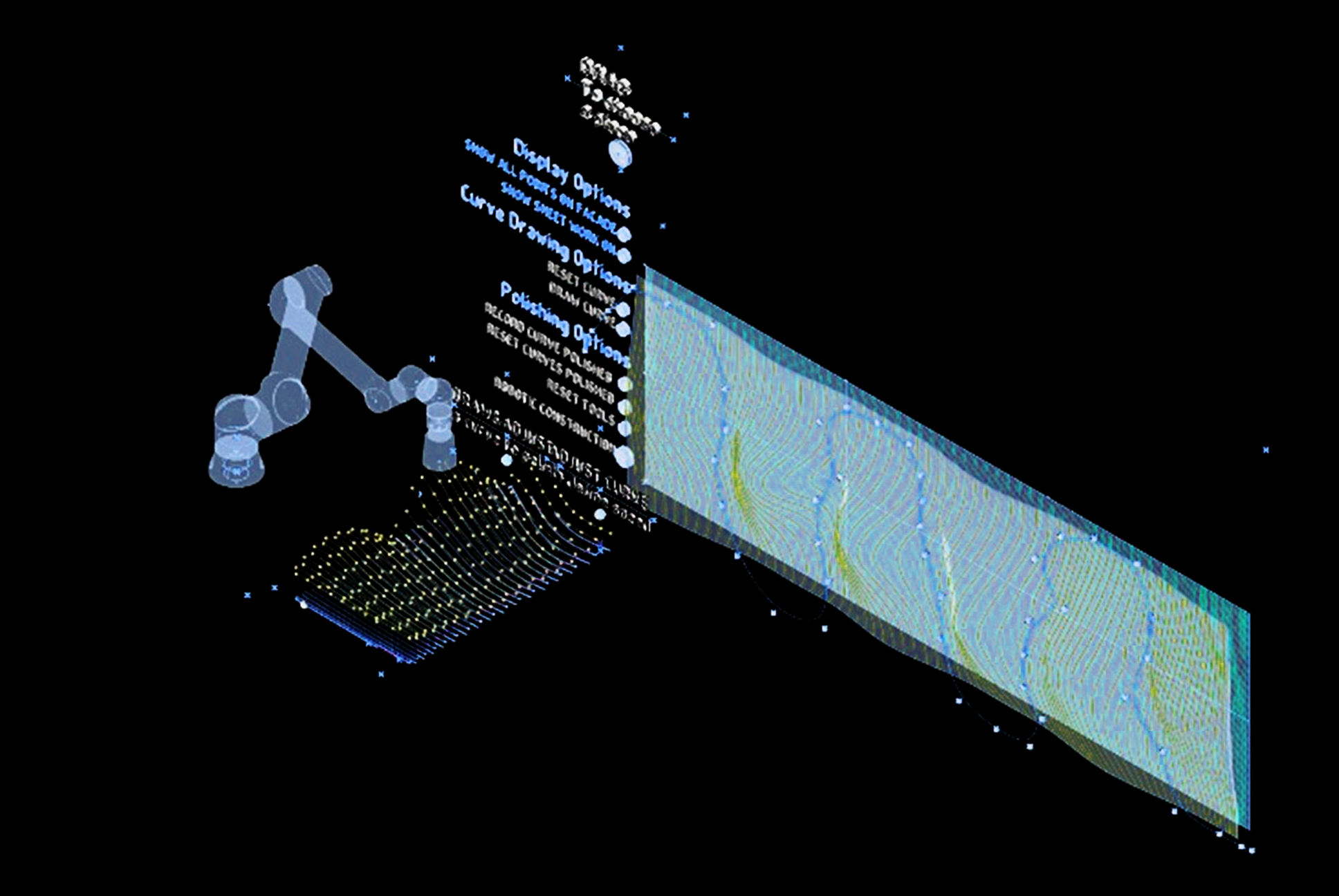

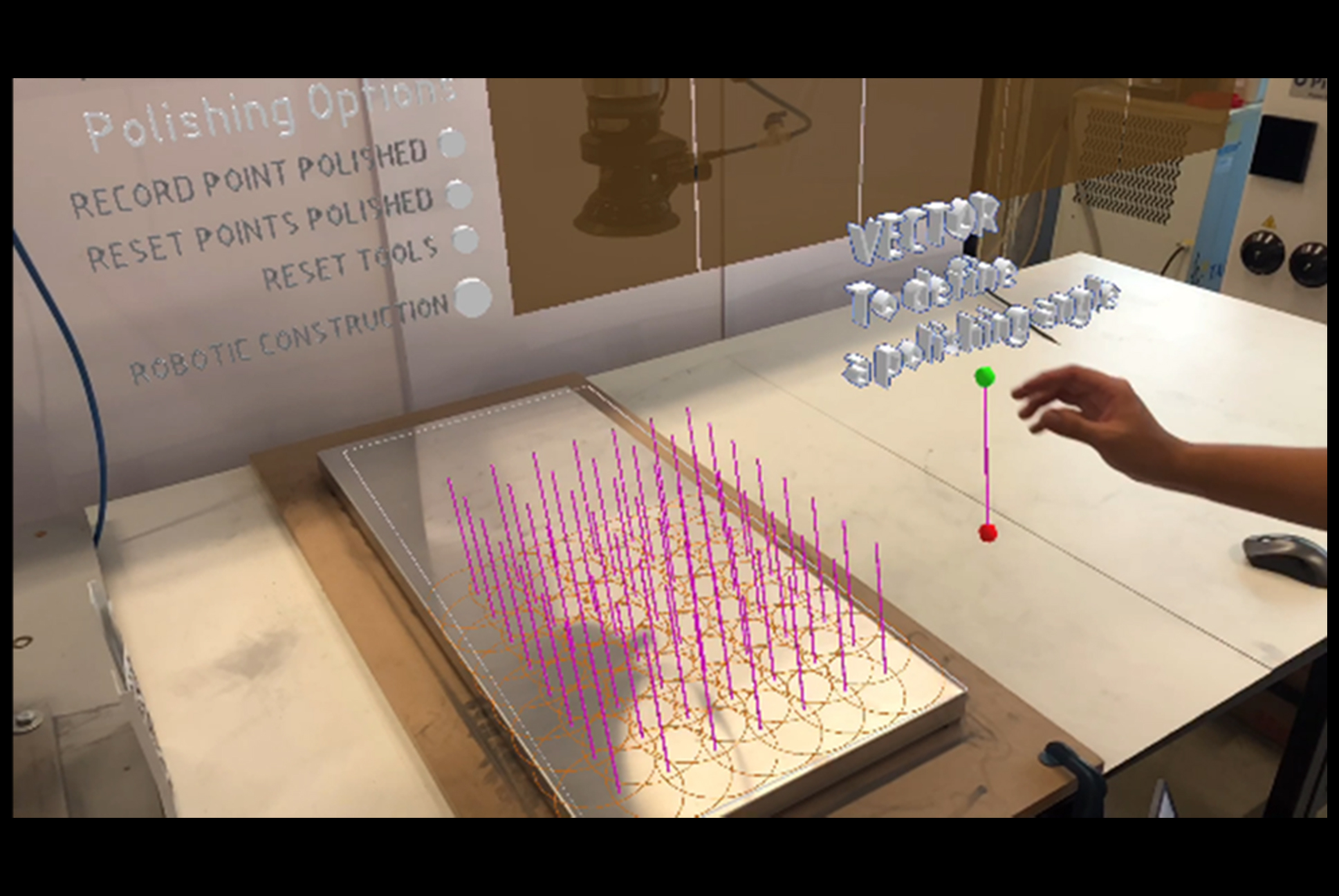

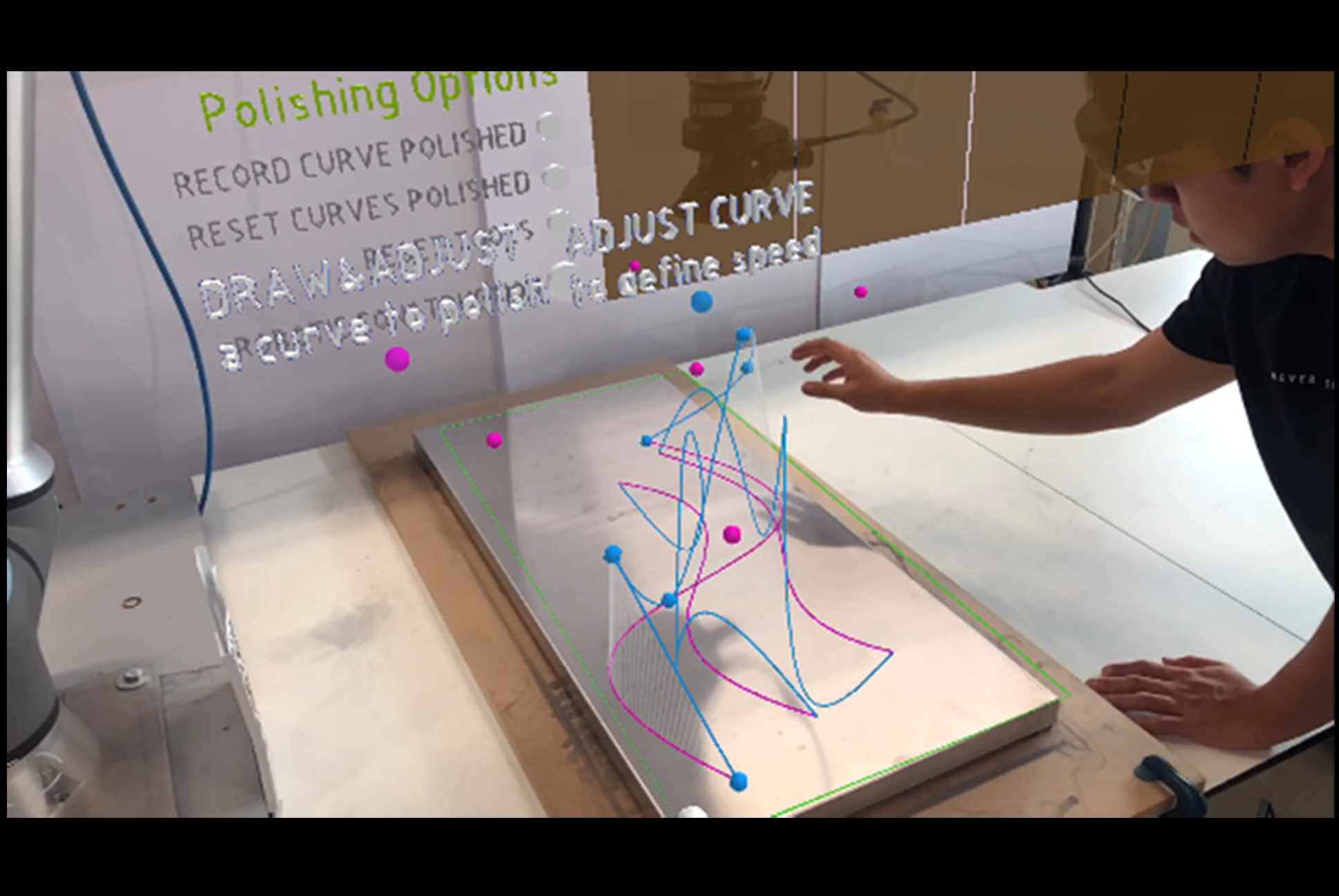

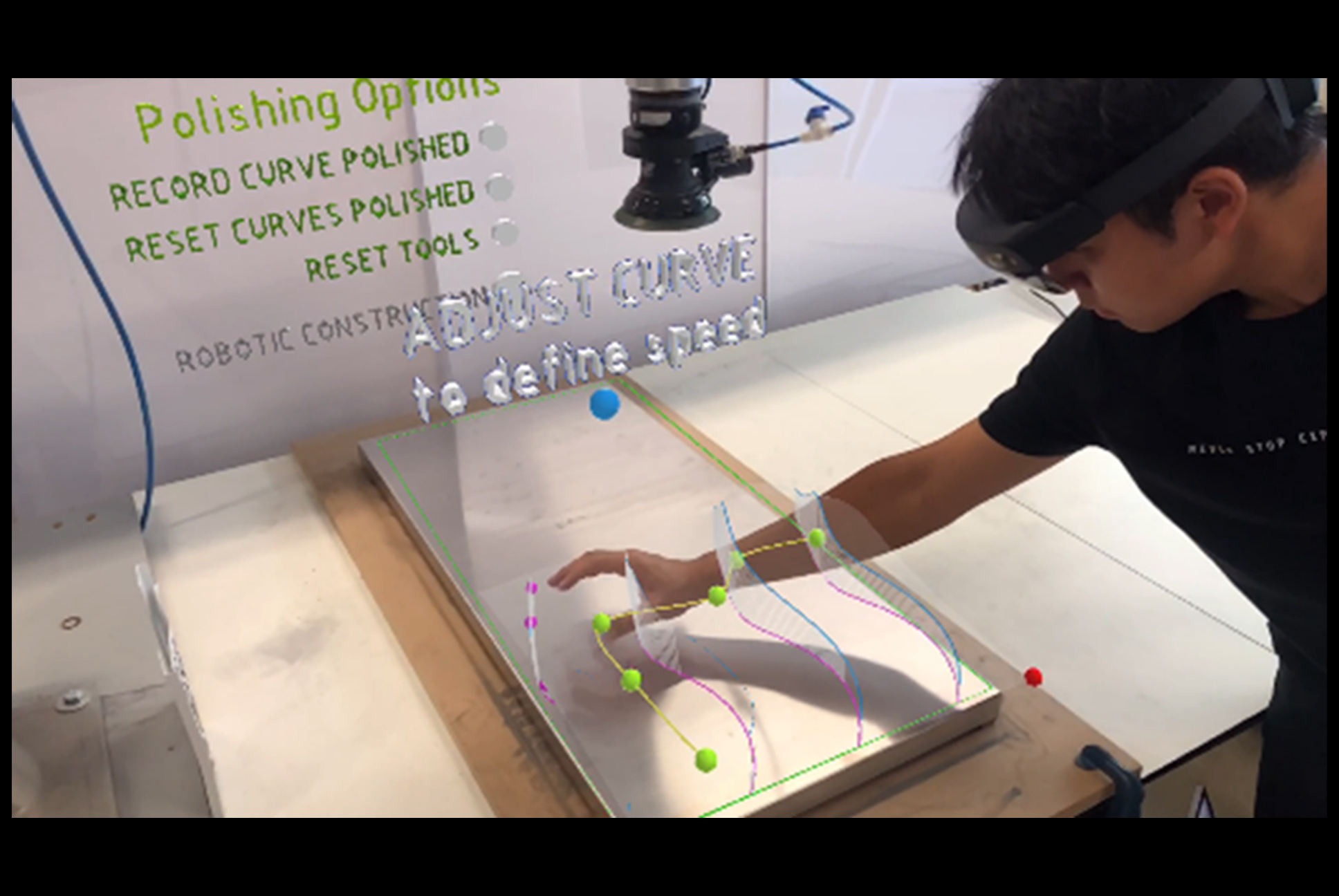

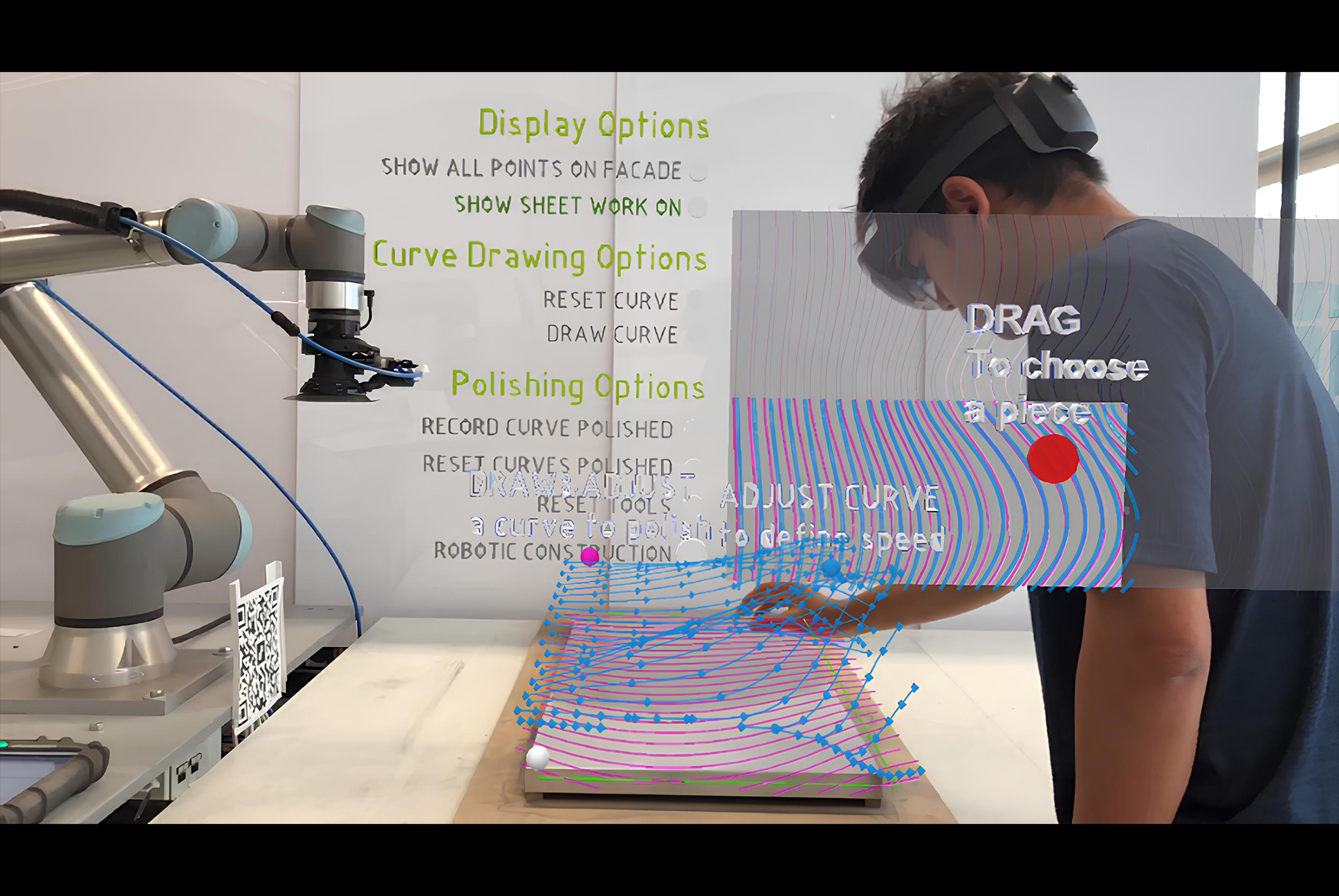

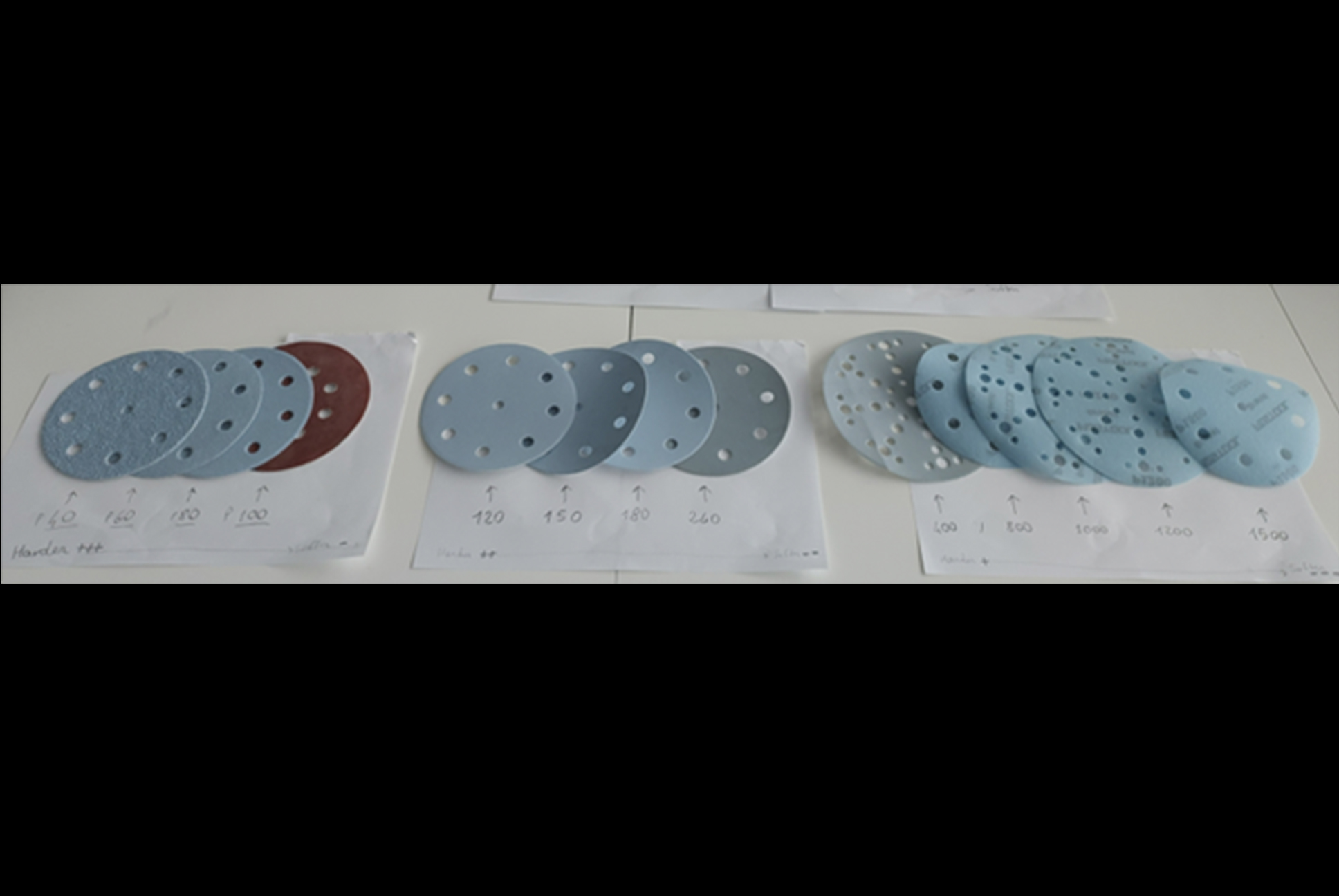

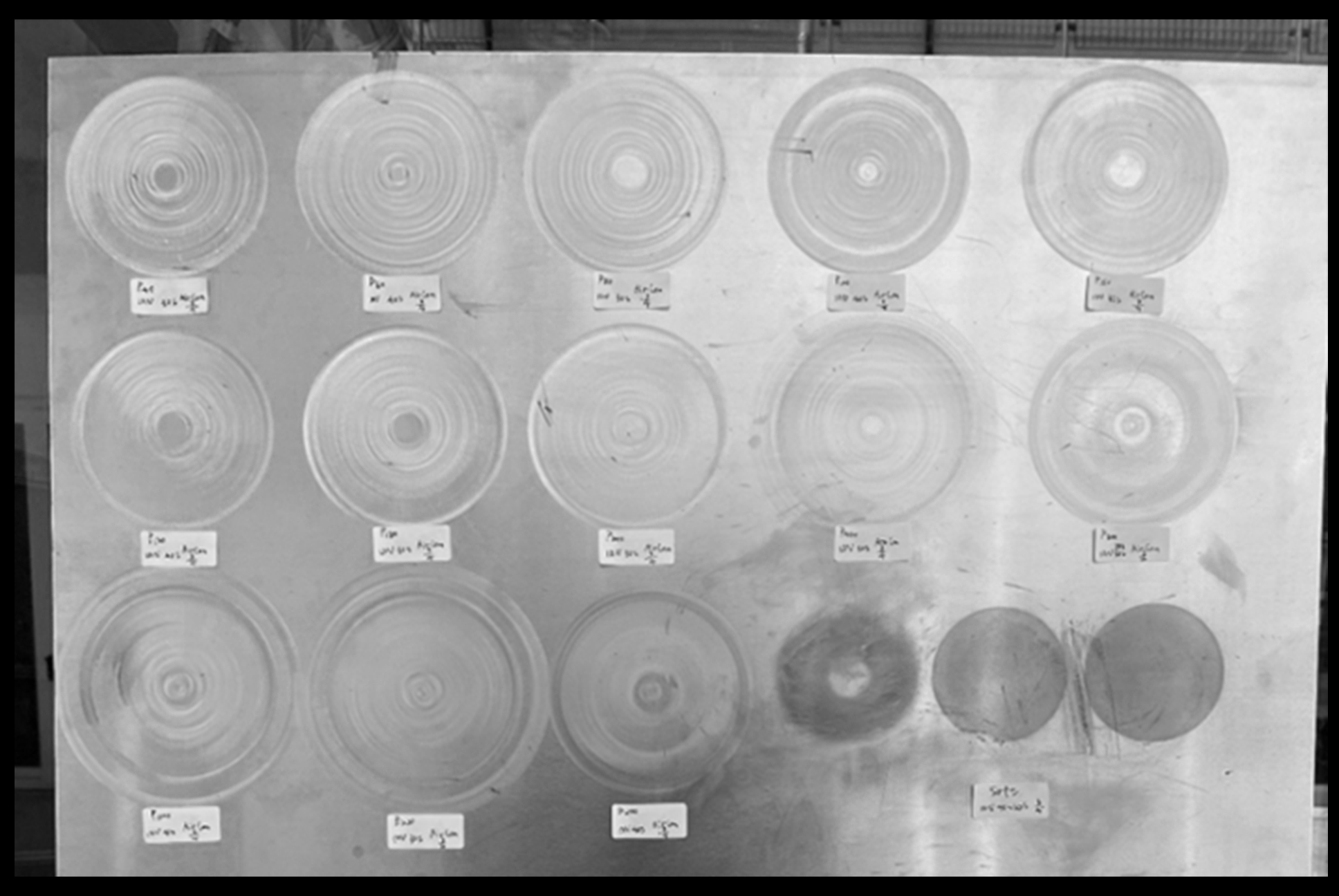

The proposed system integrates four main stages: localization, filter selection, design and adjustment, and robotic machining. A head-mounted AR device (HoloLens 2) maps the work area, tracks user gestures, and provides interactive 3D feedback through virtual buttons. Gestures are translated into 3D paths using Grasshopper and Fologram, while parametric filters shape how these paths are interpreted as robot motion commands. The robot, equipped with a pneumatic grinding tool and sandpaper ranging from coarse (P50) to fine (P1500), follows the generated trajectory and adjusts speed and force based on gesture-derived data. Several filter types (dot matrix, straight grid, and curve disturbance) support different visual and textural outcomes.
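The exact filter logic of the Grasshopper/Fologram definition is not described here, so the following Python sketch only illustrates one way the three filter types and the gesture-to-process mapping could work. It assumes the gesture stroke has already been projected onto the sheet plane as (x, y) samples in meters with timestamps. All function names, the grid pitch, the speed and force ranges, and the rule that faster gestures produce faster, lighter passes are hypothetical.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float]  # (x, y) on the sheet plane, meters (assumed)


@dataclass
class Target:
    """Hypothetical robot target: position plus process parameters."""
    x: float
    y: float
    speed: float  # feed speed, m/s (assumed range)
    force: float  # normal grinding force, N (assumed range)


def dot_matrix(path: list[Point], pitch: float) -> list[Point]:
    """Snap samples to the nearest node of a square grid, keeping each node once."""
    seen: set[tuple[int, int]] = set()
    out: list[Point] = []
    for x, y in path:
        key = (round(x / pitch), round(y / pitch))  # integer grid indices
        if key not in seen:
            seen.add(key)
            out.append((key[0] * pitch, key[1] * pitch))
    return out


def straight_grid(path: list[Point], pitch: float) -> list[Point]:
    """Move the whole stroke onto the nearest grid line along its dominant axis."""
    dx = abs(path[-1][0] - path[0][0])
    dy = abs(path[-1][1] - path[0][1])
    if dx >= dy:  # mostly horizontal: snap to the nearest row
        mean_y = sum(y for _, y in path) / len(path)
        row = round(mean_y / pitch) * pitch
        return [(x, row) for x, _ in path]
    mean_x = sum(x for x, _ in path) / len(path)  # mostly vertical: nearest column
    col = round(mean_x / pitch) * pitch
    return [(col, y) for _, y in path]


def curve_disturbance(path: list[Point], amplitude: float, wavelength: float) -> list[Point]:
    """Offset each sample along the local normal by a sine of arc length."""
    out: list[Point] = []
    s = 0.0
    for i, (x, y) in enumerate(path):
        if i > 0:
            s += math.dist(path[i - 1], path[i])
        a, b = path[max(i - 1, 0)], path[min(i + 1, len(path) - 1)]
        tx, ty = b[0] - a[0], b[1] - a[1]  # tangent from neighboring samples
        norm = math.hypot(tx, ty) or 1.0
        nx, ny = -ty / norm, tx / norm  # left-hand normal
        off = amplitude * math.sin(2 * math.pi * s / wavelength)
        out.append((x + off * nx, y + off * ny))
    return out


def stroke_parameters(raw: list[Point], times: list[float],
                      v_range=(0.01, 0.10), f_range=(10.0, 40.0),
                      hand_v_max=1.0) -> tuple[float, float]:
    """Map mean hand speed of the raw stroke to one feed speed and force."""
    length = sum(math.dist(raw[i - 1], raw[i]) for i in range(1, len(raw)))
    duration = times[-1] - times[0]
    hand_v = length / duration if duration > 0 else 0.0
    t = min(hand_v / hand_v_max, 1.0)  # normalized to 0..1
    speed = v_range[0] + t * (v_range[1] - v_range[0])  # faster gesture, faster feed
    force = f_range[1] - t * (f_range[1] - f_range[0])  # faster gesture, lighter contact
    return speed, force


FILTERS = {
    "dot_matrix": dot_matrix,
    "straight_grid": straight_grid,
    "curve_disturbance": curve_disturbance,
}


def plan(raw: list[Point], times: list[float], filter_name: str, **kw) -> list[Target]:
    """Apply one filter to a gesture stroke and attach process parameters."""
    shaped = FILTERS[filter_name](raw, **kw)
    speed, force = stroke_parameters(raw, times)
    return [Target(x, y, speed, force) for x, y in shaped]


if __name__ == "__main__":
    raw = [(0.00, 0.02), (0.05, 0.03), (0.10, 0.01), (0.15, 0.02)]
    times = [0.0, 0.1, 0.2, 0.3]
    for t in plan(raw, times, "straight_grid", pitch=0.05):
        print(t)
```

For example, a roughly horizontal stroke filtered with `straight_grid` at a 50 mm pitch is moved onto the row at y = 0 while keeping its x samples. Computing a single speed and force per stroke is a simplification; a per-sample mapping would require re-associating filtered points with the original timestamps.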

The system has been tested on a 1×3 meter aluminum surface, successfully generating patterned outputs with controlled spacing and variation. Real-time AR feedback speeds up design iteration, while gesture-based interaction lowers the technical barrier to programming robotic motion. Users with minimal experience in digital fabrication can design and execute surface treatments, although expertise improves control over complex filters. The output patterns reflect both robotic precision and user-driven irregularity. Although the limited accuracy of the AR overlay introduces minor deviations, the resulting artifacts demonstrate the feasibility of intuitive robot programming for aesthetic and functional surface modification.


MATERIALS

Aluminum | Digital Materials

PROCESSES

Robotic Grinding | Augmented Reality


ROLES


STAKEHOLDERS

LOCATION


YEAR

2023
